Working with data

Once data has been retrieved, Treble offers several ways of working with it within the SDK. The impulse response classes provide postprocessing methods such as device/binaural rendering, filtering, convolution with audio, and summing.

On this page we will show you examples of how to work with data within the SDK.

Device/Binaural rendering

The SpatialIR class has a method to render a device signal.

The device can be anything that contains one or more microphones and has been imported into Treble's device library.

It can, for example, be a Head Related Transfer Function (HRTF) for rendering binaural audio or, similarly, a Device Related Transfer Function (DRTF) describing a device with an array of microphones.

The signal can be rendered like this:

spatial_ir = results.get_spatial_ir(source=source, receiver=receiver)

device = tsdk.device_library.get_by_name("hrtf_device")

device_ir = spatial_ir.render_device_ir(device=device, orientation=treble.Rotation(azimuth=40))

where device is some device from the device library.

The output of the rendering is an instance of the DeviceIR class, which has all the functionality of the MonoIR class as well as the option of creating a new device render:

new_device_ir = device_ir.change_device(
    device=device,
    orientation=treble.Rotation(azimuth=0, elevation=10, roll=25)
)

Devices created after mid-November 2025 have a max_frequency property, which describes the maximum frequency before the spherical harmonics used to fit the device start to deviate from the input data.

By default, renders using such devices will be low-pass filtered at that frequency to ensure validity of the output DeviceIR.

This filter can be disabled in two ways: either by passing the argument ignore_max_frequency=True to the rendering method, or by choosing phase linearization when the device is created, which linearizes the phase above the maximum frequency.
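
To give a feel for what low-pass filtering at a maximum frequency does to a signal, here is a minimal NumPy sketch. It uses a simple FFT brick-wall filter, which is an assumption made for illustration; the filter design the SDK actually applies is not described here, and `brickwall_lowpass` is a hypothetical helper, not an SDK function.

```python
import numpy as np

def brickwall_lowpass(signal: np.ndarray, sampling_rate: int, cutoff_hz: float) -> np.ndarray:
    """Zero every frequency bin above cutoff_hz (illustrative, not the SDK's filter)."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sampling_rate)
    spectrum[freqs > cutoff_hz] = 0.0
    return np.fft.irfft(spectrum, n=len(signal))

# Two-tone test signal, one second long: 500 Hz lies below the cutoff, 6 kHz above it.
sampling_rate = 32000
t = np.arange(sampling_rate) / sampling_rate
low_tone = np.sin(2 * np.pi * 500 * t)
high_tone = np.sin(2 * np.pi * 6000 * t)

filtered = brickwall_lowpass(low_tone + high_tone, sampling_rate, cutoff_hz=2000.0)
```

After filtering, only the 500 Hz component remains. In the same way, content above max_frequency is removed from a render unless the filter is disabled.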

Batch rendering

Several IRs can be rendered in a batch for easy data augmentation, e.g. if multiple orientations of the same device are wanted. A list of IRs and a list of rotations can be sent to treble.batch_render_device_ir() along with a list of the devices to render with.

By default, a render is executed for every combination of devices, IRs and rotations. When using the optional orientation_application=zipped parameter, the number of IRs and rotations must be the same, and for every device each IR[i] is rendered with its matching rotation[i].

sim = proj.get_simulation('Sim-ID')

res = sim.get_results_object()

spatial_ir1 = res.get_spatial_ir(sim.sources[0], sim.receivers[0])

spatial_ir2 = res.get_spatial_ir(sim.sources[0], sim.receivers[1])

spatial_ir3 = res.get_spatial_ir(sim.sources[0], sim.receivers[2])

device1 = tsdk.device_library.get_device("device1-ID")

device2 = tsdk.device_library.get_device("device2-ID")

rotations = [
    treble.Rotation(0., 0., 15.),
    treble.Rotation(0., 15., 15.),
    treble.Rotation(15., 15., 15.),
    treble.Rotation(15., 15., 15.),
    treble.Rotation(25., 15., 15.),
    treble.Rotation(25., 25., 15.),
    treble.Rotation(25., 25., 25.),
    treble.Rotation(45., 15., 15.),
    treble.Rotation(45., 30., 15.)
]

res = treble.batch_render_device_ir([device1, device2], [spatial_ir1, spatial_ir2, spatial_ir3], rotations)
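
The difference between the default behaviour and the zipped mode can be sketched in plain Python. The helper `enumerate_renders` below is hypothetical and does not call the SDK; it only lists which (device, IR, rotation) triples each mode would cover, with strings standing in for the real objects.

```python
from itertools import product

def enumerate_renders(devices, irs, rotations, zipped=False):
    """List the (device, ir, rotation) triples a batch render would cover.

    Hypothetical helper for illustration only; it does not call the SDK.
    """
    if zipped:
        if len(irs) != len(rotations):
            raise ValueError("zipped mode needs as many rotations as IRs")
        # Pair IR[i] with rotation[i], repeated for every device.
        return [(d, ir, rot) for d in devices for ir, rot in zip(irs, rotations)]
    # Default: every combination of device, IR and rotation.
    return list(product(devices, irs, rotations))

devices = ["device1", "device2"]
irs = ["ir1", "ir2", "ir3"]
rotations = ["rot1", "rot2", "rot3"]

all_combinations = enumerate_renders(devices, irs, rotations)
zipped_combinations = enumerate_renders(devices, irs, rotations, zipped=True)
print(len(all_combinations))     # 2 * 3 * 3 = 18
print(len(zipped_combinations))  # 2 * 3 = 6
```

Following the same logic, the default-mode call above with 2 devices, 3 IRs and 9 rotations corresponds to 2 × 3 × 9 = 54 renders.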

Output data structure

The output of the batch rendering function is a dictionary that organizes the rendered impulse responses (IRs) by device, input IR, and rotation. Consider the following scenario, where we render N spatial impulse responses to M devices with R head rotations.

Structure overview

{
    "<device_name_1>": [
        [IR_1_rot_1, IR_1_rot_2, ..., IR_1_rot_R], # Results for input IR_1
        [IR_2_rot_1, IR_2_rot_2, ..., IR_2_rot_R], # Results for input IR_2
        ...
        [IR_N_rot_1, IR_N_rot_2, ..., IR_N_rot_R], # Results for input IR_N
    ],
    "<device_name_2>": [
        [IR_1_rot_1, ..., IR_1_rot_R],
        ...
    ],
    ...
    "<device_name_M>": [...]
}

Explanation of data structure

- Dictionary keys (<device_name_X>): The names of the devices (e.g., "dummy_head", "ar_glasses", "smart_speaker").

- Top-level list (length N): Each element corresponds to one of the N input spatial impulse responses.

- Inner list (length R): Each element is the rendered impulse response for a specific rotation of the given device and input IR.

- IR values (IR_n_rot_r): These are the DeviceIR objects, i.e., the IRs recorded at the microphones of your device. For example, for the dummy head device, the DeviceIRs are binaural room impulse responses.

Example

If you provide 2 input spatial IRs (N=2), 2 devices (M=2), and 3 rotations (R=3), the output will look like this:

{
    "dummy_head": [
        [IR_1_rot_1, IR_1_rot_2, IR_1_rot_3], # Input IR 1 rendered on dummy head
        [IR_2_rot_1, IR_2_rot_2, IR_2_rot_3]  # Input IR 2 rendered on dummy head
    ],
    "ar_glasses": [
        [IR_1_rot_1, IR_1_rot_2, IR_1_rot_3], # Input IR 1 rendered on AR glasses
        [IR_2_rot_1, IR_2_rot_2, IR_2_rot_3]  # Input IR 2 rendered on AR glasses
    ]
}

Fetching the 3rd rotation of the 2nd IR for the AR glasses and plotting it is then straightforward (indices are zero-based):

res["ar_glasses"][1][2].plot()
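
When every rendered IR needs to be visited, for example to export a whole augmented dataset, the nested structure can be walked with three loops. The sketch below is one possible way to do this; `flatten_batch_output` is a hypothetical helper, and placeholder strings stand in for the DeviceIR objects so that it runs without the SDK.

```python
def flatten_batch_output(batch_output):
    """Yield (device_name, ir_index, rotation_index, rendered_ir) for each entry."""
    for device_name, per_ir in batch_output.items():
        for ir_index, per_rotation in enumerate(per_ir):
            for rotation_index, rendered_ir in enumerate(per_rotation):
                yield device_name, ir_index, rotation_index, rendered_ir

# Placeholder strings stand in for DeviceIR objects (N=2, M=2, R=3).
example_output = {
    device: [[f"IR_{n}_rot_{r}" for r in range(1, 4)] for n in range(1, 3)]
    for device in ("dummy_head", "ar_glasses")
}

rows = list(flatten_batch_output(example_output))
for device_name, ir_index, rotation_index, rendered_ir in rows:
    print(device_name, ir_index, rotation_index, rendered_ir)
```

The indices yielded here are the same zero-based indices used for direct lookup, so the entry ("ar_glasses", 1, 2, ...) corresponds to the 3rd rotation of the 2nd IR.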

Summing impulse responses

If you are working with multiple sources and are interested in their combined response, you can sum the impulse responses directly: all the IR classes overload the + operator, so two IR objects can simply be added together.

mono_ir = results.get_mono_ir(source=source, receiver=receiver)

mono_ir2 = results.get_mono_ir(source=source_2, receiver=receiver)

summed_ir = mono_ir + mono_ir2

Any time alignment and zero padding that is needed is handled for you, and the result is simply another MonoIR object.

This works just as well with other IR classes.
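
To illustrate why zero padding matters when summing, here is a minimal NumPy sketch that adds two impulse responses of different lengths. It assumes both share the same sampling rate and start at the same time; it is not the SDK's implementation, and `sum_with_zero_padding` is a hypothetical helper.

```python
import numpy as np

def sum_with_zero_padding(ir_a: np.ndarray, ir_b: np.ndarray) -> np.ndarray:
    """Sum two 1-D IRs of possibly different length by padding the shorter one."""
    length = max(len(ir_a), len(ir_b))
    padded_a = np.pad(ir_a, (0, length - len(ir_a)))
    padded_b = np.pad(ir_b, (0, length - len(ir_b)))
    return padded_a + padded_b

ir_a = np.array([1.0, 0.5, 0.25])
ir_b = np.array([0.0, 1.0, 0.5, 0.25, 0.125])

summed = sum_with_zero_padding(ir_a, ir_b)
print(summed)  # [1.    1.5   0.75  0.25  0.125]
```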

Filtering

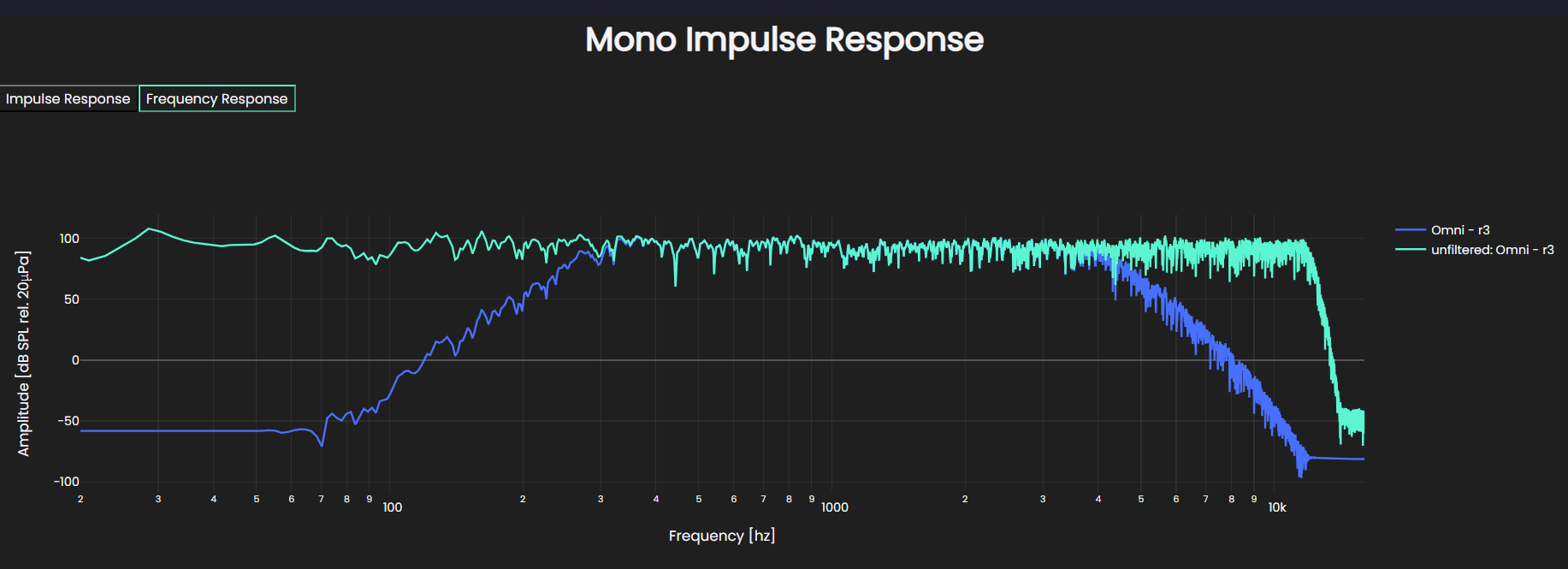

Impulse responses can be filtered either with the provided filter definitions or with a filter you construct yourself. An IR can be filtered with a single filter or with a list of filters, which are applied sequentially.

filtered_ir = mono_ir.filter(
    [treble.ButterworthFilter(hp_order=6, hp_frequency=300), treble.ButterworthFilter(lp_order=6, lp_frequency=4000)]
)

filtered_ir.plot(comparison={"unfiltered":mono_ir})

We also offer a collection of other filter types: OctaveBandFilter, GainFilter, FIRFilter, and IIRFilter.

We have also made it simple to define custom filters.

To do so, create a class that inherits from FilterDefinition and implements a filter() method that takes a numpy array and a sampling rate and returns a numpy array. Instances of that class should then work directly on the Treble IR objects.

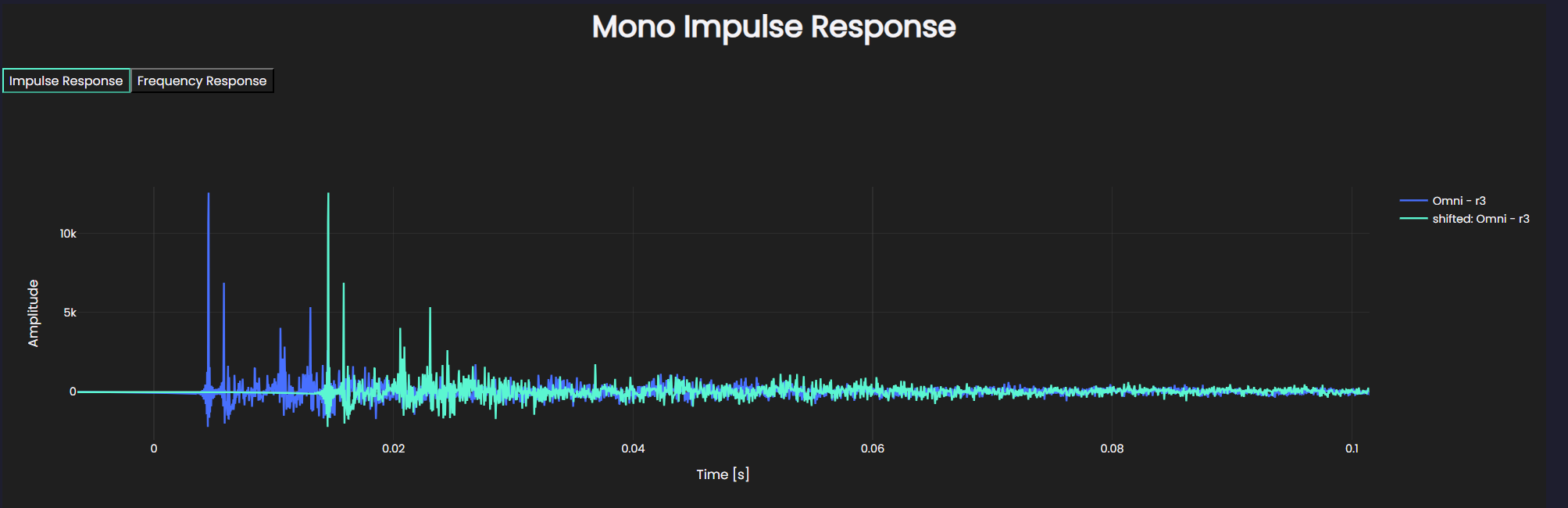

As an example, we can define a custom delay filter class which, for simplicity, takes in a one-dimensional signal and shifts it in positive time:

import numpy as np

class DelayFilter(FilterDefinition):
    def __init__(self, delay_seconds):
        self.delay_seconds = delay_seconds
        # Only shifts in positive time are supported
        assert self.delay_seconds >= 0

    def filter(self, data: np.ndarray, sampling_rate: int) -> np.ndarray:
        # Convert the delay from seconds to a whole number of samples
        delay_samples = int(np.round(self.delay_seconds * sampling_rate))
        # Prepend zeros so that the signal starts delay_samples later
        delayed_data = np.zeros(len(data) + delay_samples)
        delayed_data[delay_samples:] = data
        return delayed_data

# Apply filter to mono ir signal (single channel)

mono_ir_shifted = mono_ir.filter([DelayFilter(0.01)])

mono_ir.plot(comparison={"shifted":mono_ir_shifted})
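
The way a list of filters is applied sequentially can be sketched without the SDK. In the block below, `FilterStandIn` is a hypothetical stand-in for FilterDefinition, and `ScaleFilter`, `SampleDelay` and `apply_sequentially` are illustrative names only. The sketch shows the contract described above (a filter() method taking an array and a sampling rate and returning an array) and that the output of one filter becomes the input of the next.

```python
import numpy as np

class FilterStandIn:
    """Hypothetical stand-in for FilterDefinition, so the sketch runs without the SDK."""

    def filter(self, data: np.ndarray, sampling_rate: int) -> np.ndarray:
        raise NotImplementedError

class ScaleFilter(FilterStandIn):
    """Multiply every sample by a constant factor."""

    def __init__(self, factor: float):
        self.factor = factor

    def filter(self, data: np.ndarray, sampling_rate: int) -> np.ndarray:
        return data * self.factor

class SampleDelay(FilterStandIn):
    """Prepend the number of zeros that corresponds to delay_seconds."""

    def __init__(self, delay_seconds: float):
        self.delay_seconds = delay_seconds

    def filter(self, data: np.ndarray, sampling_rate: int) -> np.ndarray:
        delay_samples = int(np.round(self.delay_seconds * sampling_rate))
        return np.concatenate([np.zeros(delay_samples), data])

def apply_sequentially(data, sampling_rate, filters):
    """Feed the output of each filter into the next one, in list order."""
    for current_filter in filters:
        data = current_filter.filter(data, sampling_rate)
    return data

signal = np.array([1.0, 0.0])
# Halve the signal, then delay it by 2 ms (2 samples at 1 kHz).
chained = apply_sequentially(signal, 1000, [ScaleFilter(0.5), SampleDelay(0.002)])
print(chained)  # [0.  0.  0.5 0. ]
```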

Convolve with Audio

Treble provides a way to load in external audio to convolve with the simulated impulse responses.

loaded_audio = treble.AudioSignal("some_audio.wav")

This can then be convolved with an impulse response and played out loud.

convolved_audio = mono_ir.convolve_with_audio_signal(loaded_audio)

convolved_audio.playback()

This can also be done with a binaurally rendered signal. Let's say we have a simulation with two sources and want to listen to the combined effect of both.

spatial_ir_1 = results.get_spatial_ir(source="s1", receiver="r1")

spatial_ir_2 = results.get_spatial_ir(source="s2", receiver="r1")

# Render the binaural responses using some hrtf device

binaural_ir_1 = spatial_ir_1.render_device_ir(hrtf_device)

binaural_ir_2 = spatial_ir_2.render_device_ir(hrtf_device)

# Convolve with audio

audio_1 = binaural_ir_1.convolve_with_audio_signal(loaded_audio)

audio_2 = binaural_ir_2.convolve_with_audio_signal(loaded_audio)

# Sum the two and play the resulting sound

(audio_1 + audio_2).playback()
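
What the convolve-and-sum workflow computes can be sketched with NumPy. This is an illustration only, with short made-up arrays standing in for the audio and for two-channel (left/right) impulse responses; `convolve_channels` is a hypothetical helper and not part of the SDK.

```python
import numpy as np

def convolve_channels(ir: np.ndarray, audio: np.ndarray) -> np.ndarray:
    """Convolve mono audio with every channel of an IR shaped (channels, samples)."""
    return np.stack([np.convolve(audio, channel) for channel in ir])

audio = np.array([1.0, 0.0, -1.0])
binaural_ir_1 = np.array([[1.0, 0.5], [0.5, 0.25]])  # rows: left, right
binaural_ir_2 = np.array([[0.0, 1.0], [0.0, 0.5]])

# Convolve each source's IR with the audio, then sum the results.
mixed = convolve_channels(binaural_ir_1, audio) + convolve_channels(binaural_ir_2, audio)

# Convolution is linear, so summing the IRs first gives the same signal
# when both sources play the same audio.
mixed_alt = convolve_channels(binaural_ir_1 + binaural_ir_2, audio)
```

Each output channel has len(audio) + len(ir) - 1 samples. If the two sources should play different audio, the IRs cannot be summed first and each source has to be convolved separately.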

This covers the basics of what we offer as tools to process your results within the SDK. We are happy to hear your suggestions on what could benefit your workflows so do not hesitate to reach out with feature requests.