Use Case: Synthetic Data Generation for Machine Learning

The Treble SDK simplifies the creation of high-quality acoustic training data. It enables the simulation of realistic acoustic scenes that incorporate complex materials, geometries, furnishings, directional sound sources, and microphone arrays. Developers can use these simulations to train and improve audio machine learning (ML) algorithms for tasks such as speech enhancement, source localization, blind room estimation, echo cancellation, room adaptation, and generative AI audio. Independent research has validated the benefits of wave-based synthetic acoustic data and shown that it can significantly improve ML performance. See independent research here.

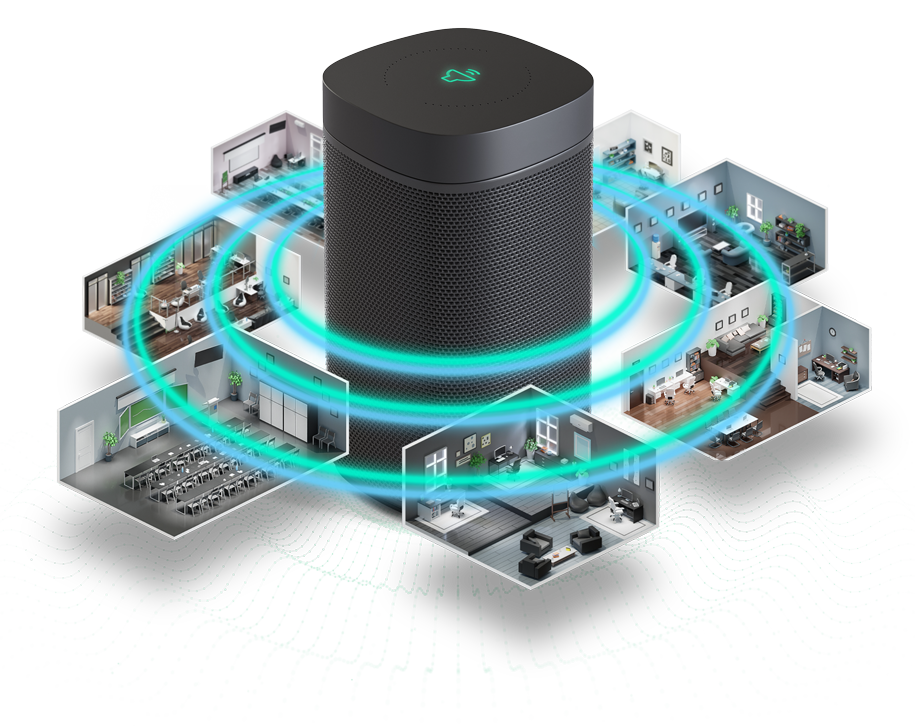

Large dataset with device-specific impulse responses for ML training

In this example from the May 2025 webinar, we show how to create device-specific impulse responses for a large dataset. We create datasets for a smart speaker with two different microphone configurations, so the device is the only variable that differs between the datasets. This makes it straightforward to train two ML models, one per dataset, and compare the performance of the two configurations.
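The controlled design described above can be sketched in plain Python: sample the scenes (room, source position, device position) once with a fixed seed, then pair the identical scene list with each device configuration. This is a minimal illustration under stated assumptions, not the webinar notebook and not the Treble SDK API. The names `Scene`, `circular_array`, `DEVICE_CONFIGS`, `sample_scenes` and `build_datasets`, the room IDs, and the two circular array geometries are all hypothetical.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Scene:
    """One acoustic scene: room, source position and device position (metres)."""
    room_id: str
    source_pos: tuple[float, float, float]
    device_pos: tuple[float, float, float]


def circular_array(num_mics: int, radius_m: float) -> list[tuple[float, float, float]]:
    """Mic offsets (x, y, z) relative to the device centre, evenly spaced on a circle."""
    return [
        (radius_m * math.cos(2 * math.pi * k / num_mics),
         radius_m * math.sin(2 * math.pi * k / num_mics),
         0.0)
        for k in range(num_mics)
    ]


# Hypothetical device configurations; the actual webinar arrays are not specified here.
DEVICE_CONFIGS = {
    "config_a": circular_array(num_mics=4, radius_m=0.035),
    "config_b": circular_array(num_mics=6, radius_m=0.045),
}


def sample_scenes(room_ids, scenes_per_room, seed=0):
    """Sample the shared scene list once; the fixed seed makes it reproducible.

    Assumes every room is at least 4 m x 4 m so the sampled positions fit inside it.
    """
    rng = random.Random(seed)
    scenes = []
    for room_id in room_ids:
        for _ in range(scenes_per_room):
            src = (rng.uniform(0.5, 3.5), rng.uniform(0.5, 3.5), rng.uniform(1.2, 1.8))
            dev = (rng.uniform(0.5, 3.5), rng.uniform(0.5, 3.5), 0.8)
            scenes.append(Scene(room_id, src, dev))
    return scenes


def build_datasets(scenes, device_configs):
    """Pair every scene with every device config, so only the device varies."""
    return {
        name: [(scene, mics) for scene in scenes]
        for name, mics in device_configs.items()
    }


scenes = sample_scenes(["room_01", "room_02"], scenes_per_room=3)
datasets = build_datasets(scenes, DEVICE_CONFIGS)
for name, jobs in datasets.items():
    print(name, len(jobs), "jobs,", len(jobs[0][1]), "mics")
```

Because both datasets reuse the same `scenes` list, any performance difference between the two trained models can be attributed to the microphone configuration rather than to differences in rooms or positions.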

The device-specific data generation is demonstrated in the video below, where audio data is generated for two different microphone array configurations. Further down, you can download the presented notebook and try the data generation yourself.

Large dataset for ML training

In this tutorial, we show how to set up a batch simulation in Treble using geometries from our geometry database.
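Before submitting a batch, it can help to generate the list of jobs (room, source, receiver) deterministically. The sketch below shows one common approach, rejection sampling of source/receiver positions with a wall clearance and a minimum source-receiver distance. It does not call the Treble SDK. The shoebox dimensions in `ROOMS` are hypothetical stand-ins for geometries selected from the geometry database, and `sample_pairs` and `build_batch` are illustrative names.

```python
import math
import random

# Hypothetical shoebox dimensions (width, depth, height) in metres, standing in for
# rooms selected from a geometry database; real database rooms are not shoeboxes.
ROOMS = {
    "office_small": (3.0, 4.0, 2.7),
    "living_room": (5.0, 6.5, 2.6),
}


def random_point(rng, dims, wall_margin):
    """Uniform point inside the room, keeping `wall_margin` metres from every surface."""
    return tuple(rng.uniform(wall_margin, d - wall_margin) for d in dims)


def sample_pairs(dims, n_pairs, rng, wall_margin=0.5, min_distance=1.0, max_tries=1000):
    """Rejection-sample source/receiver pairs at least `min_distance` metres apart."""
    pairs = []
    tries = 0
    while len(pairs) < n_pairs:
        tries += 1
        if tries > max_tries:
            raise RuntimeError("could not satisfy constraints; relax margin or distance")
        src = random_point(rng, dims, wall_margin)
        rec = random_point(rng, dims, wall_margin)
        if math.dist(src, rec) >= min_distance:
            pairs.append((src, rec))
    return pairs


def build_batch(rooms, pairs_per_room, seed=42):
    """One job per (room, source, receiver); the seeded RNG makes the batch reproducible."""
    rng = random.Random(seed)
    return [
        {"room": name, "source": src, "receiver": rec}
        for name, dims in rooms.items()
        for src, rec in sample_pairs(dims, pairs_per_room, rng)
    ]


batch = build_batch(ROOMS, pairs_per_room=5)
print(len(batch))
```

Seeding the generator means the same batch can be rebuilt later, which is useful when a dataset has to be regenerated or extended with the same scene layout.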